All my storage needs, public cloud aside, are now consolidated into a Network Attached Storage (NAS) box I built last year using FreeNAS. It has the equivalent of RAID5. However, whatever redundancy is designed into a single NAS still won’t address the risk of a catastrophic failure of the whole installation. It would be better to have remote replication.

For the uninitiated, let me explain a little about RAID protection.

RAID5 provides single-disk redundancy. If any one drive fails, your data is still safe, because it can be reconstructed from the remaining drives. Technically, we’d say your data is now “at risk”, because you no longer have any protection. The RAID volume is degraded in this situation. If a second disk were to fail before you replace the first failed disk and rebuild the redundancy, you would lose your data.
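To make the single-disk redundancy concrete, here is a minimal sketch of the XOR parity idea behind it. It is a simplification: the block contents are made up, and a real RAID5 array rotates parity across all the drives rather than keeping it on one dedicated disk.

```python
from functools import reduce

def xor_blocks(blocks):
    """XOR equal-length byte blocks together, byte position by byte position."""
    return bytes(reduce(lambda a, b: a ^ b, column) for column in zip(*blocks))

# Three data blocks, as if striped across three disks (hypothetical contents).
data = [b"NAS1", b"NAS2", b"NAS3"]

# The parity block, as if stored on a fourth disk.
parity = xor_blocks(data)

# The disk holding data[1] fails. XOR-ing the surviving data blocks with the
# parity block reconstructs what was lost.
rebuilt = xor_blocks([data[0], data[2], parity])
assert rebuilt == data[1]
```

With two blocks missing there is only one parity equation for two unknowns, which is why a second failure on a degraded volume means data loss.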

Is such a double disk failure common? If your original set of RAID5 drives is of the same brand and model, bought at the same time from the same shop, then it’s likely they were all manufactured at the same time on the same factory assembly line. Once in use, they’ve all gone through the same number of boot cycles and seen largely the same drive operations. If one drive fails, there’s a good chance another will fail soon. In this scenario, RAID5 isn’t terribly safe.

RAID6 addresses some of those risks by adding a second disk’s worth of parity. After the first drive failure, your data is still protected against one more failure. You lose data only when a third disk fails.

None of these protections, even if you use RAID50, RAID60 and so on, matters in the event of a catastrophic NAS failure. For example, a power surge through the hardware could damage all the hard drives simultaneously, or a software bug could corrupt the data beyond recovery.

The best protection is remote replication to a separate NAS, preferably off-site. Remote replication does mean you need a second NAS, and that means significant additional cost.

I’ve been weighing the pros and cons of going with RAID6, or investing instead in remote replication. The latter is better, but it’ll cost even more. I was considering low-end boxes like the embedded single-board computer I had used to build my pfSense gateway. Slow is alright, since these will serve only as replication slaves. Unfortunately, these systems tend to have very limited I/O expansion options. A proper PC would be more flexible, but that would cost much more.

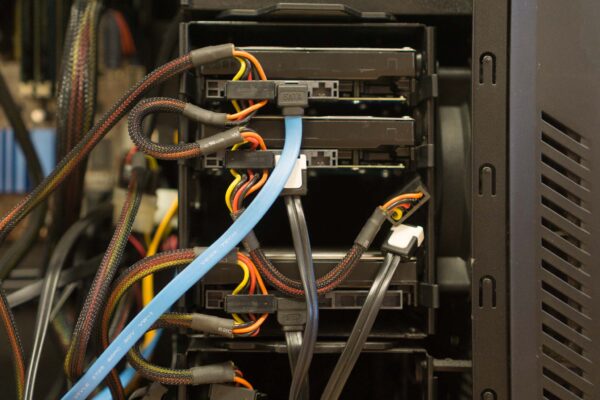

Unless, of course, you happen to have an old spare PC lying around. As it turned out, I did have an old PC that hadn’t been used for a while. It’s old, yet modern enough to run FreeBSD, and hence FreeNAS worked well on it. It even had old hard disks in it that still worked.

That old PC became my FreeNAS replication slave. I just needed to get another SanDisk Ultra Fit Flash Drive to serve as the FreeNAS boot media, and it was good to go.

FreeNAS has built-in support for replication using ZFS’s send/recv features, so you don’t need to install anything extra. Just refer to the FreeNAS replication documentation for instructions. If the FreeNAS replication slave is on a remote network behind a NAT, you may have some reachability hurdles, but there are certainly ways around them. I made use of outbound SSH proxy connections from the slave to carry connections from master to slave. It’s a little inelegant, but ultimately it works.
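For the curious, here is a rough sketch of the kind of commands involved, written as a small Python script that only builds the command lines without running anything. It is not what FreeNAS itself runs, and it is only one reading of my SSH setup: I am assuming a reverse tunnel (`ssh -R`) opened from the slave, and every dataset name, snapshot name, host and port below is a made-up placeholder.

```python
import shlex

def reverse_tunnel_cmd(master_host, tunnel_port):
    """Run on the slave: open an outbound SSH connection to the master and
    expose the slave's own SSH port (22) on the master as localhost:tunnel_port."""
    return ["ssh", "-N", "-R", f"{tunnel_port}:localhost:22", master_host]

def replication_pipeline(dataset, prev_snap, new_snap, ssh_host, ssh_port, target):
    """Run on the master: send the changes between two snapshots and pipe
    them over SSH into 'zfs recv' on the slave."""
    send = ["zfs", "send", "-i", f"{dataset}@{prev_snap}", f"{dataset}@{new_snap}"]
    recv = ["ssh", "-p", str(ssh_port), ssh_host, "zfs", "recv", "-F", target]
    return " | ".join(shlex.join(cmd) for cmd in (send, recv))

# Hypothetical names throughout: adjust to your own pools and hosts.
tunnel = shlex.join(reverse_tunnel_cmd("replica@master.example.org", 2222))
pipeline = replication_pipeline(
    "tank/data", "monday", "tuesday", "root@localhost", 2222, "backup/data"
)
print(tunnel)
print(pipeline)
```

Because the tunnel is opened from the slave's side, nothing has to be forwarded on the remote NAT; the master simply connects to its own localhost on the tunnel port. In practice you would let FreeNAS's replication tasks handle the snapshots and the send/recv part for you.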

The public cloud is easy and convenient for most users. The cloud providers will sort out the redundancy, robustness and resiliency issues for you. However, if like me you want to own and control your own data, or at least some part of it, and thus want to run your own cloud storage, then redundancy and replication are problems you’ll need to take care of yourself.

It’s important to back up your precious data, but don’t forget that protecting the backup itself is also important.