I’ve run my personal blog website for over ten years. Although it may be just a personal website, not a busy e-commerce platform or high-security outfit, I’ve always made sure it’s run professionally, and I’m always happy to embrace the best industry standards and practices.

The web is not static. The Internet itself also evolves rapidly. But in the interest of maintaining backward compatibility, most legacy technologies continue to be supported. Since the old things still work, most website owners don’t see a need to change. The web, and even the Internet itself, is often so accommodating of non-compliant and misbehaving things that even when something is so broken it shouldn’t work at all, it still does.

Such is the case with HTML itself, for example. In the old days, people coded webpages to suit Internet Explorer. Some people were knowingly writing non-compliant HTML to take advantage of quirks in browser behaviour, while others just didn’t care, so long as the webpage sort of rendered okay.

Back in those times, I cared greatly about HTML spec compliance. I wrote about wanting XHTML 1.0 Transitional compliance in 2007. I used to hand-write HTML before adopting WordPress in 2007. Nowadays I’m dependent on WordPress and a bunch of plugins, so I can’t always achieve 100% compliant output, but I do try. For the most part, this blog is HTML5 compliant. I take pride in this because many major websites would spew out validation errors numbering in the tens or hundreds.

Many of us access the Internet using our smartphones and tablets, possibly more so than with desktop computers and notebooks, or even exclusively so. In this mobile-centric world, it’s important to position websites to be mobile-friendly. I made sure my website passed Google’s Mobile-Friendly Test tool back in 2015. I also adopted Google’s Accelerated Mobile Pages (AMP) format in early 2016 when a WordPress AMP plugin became available.

While most websites choose to use SSL only when security is absolutely needed, I began using HTTPS to secure my blog website in early 2015. That was even before Let’s Encrypt began operating to issue free SSL certificates to anyone who asks. Today, Let’s Encrypt has contributed to the increased use of HTTPS on the web. It’s a good thing. It’s free. However, HTTPS still isn’t quite everywhere yet. Many websites just don’t see the need to change the status quo.

Just any TLS certificate isn’t enough. In March 2016, I switched from the usual RSA-based certificates to ECDSA-based certificates. I know, it doesn’t matter to most people, but there are benefits, beyond just security, to using ECDSA-based certificates.

On other fronts, the web has since upgraded from the HTTP/1.1 protocol that’s been in use for a really long time (since Jan 1997 if we go by the publication date of RFC 2068). A major upgrade to HTTP, in the form of HTTP/2, arrived in 2015 with the publication of RFC 7540. HTTP/2 was turned on for this blog website in early 2016, despite having to jump through some hoops to get things working in an easily maintainable manner in my environment.

HTTPS and HTTP/2, among other things, are easy to adopt for websites that leverage cloud proxy services like CloudFlare, because it’s CloudFlare that’s doing the work. For other website owners, adopting HTTPS, and HTTP/2 in particular, might have been a bit more work.

As a system administrator, I like to look at network, disk, and system level performance metrics. I know that’s not the whole story. I talk about this topic regularly, and wrote about this in two parts back in 2015 (part 1 and part 2). Finally, in early 2016, I took concrete steps to put into practice what I preach, using Google’s mod_pagespeed module for Apache.

With some hand-tweaking, this blog website scores very favourably on Google’s PageSpeed and Yahoo’s YSlow tests. With GTmetrix’s test performed from Vancouver against my server, which is physically located in Singapore, I get a Contentful Paint in 0.8 sec, the Onload event in 1.2 sec, and full page loaded in just 1.6 sec. Hit up your favourite websites on GTmetrix to see how they score! Go ahead, you’ll see how poorly some “major” websites score.

The latest update today includes a bunch of things, all related to security.

First, the zitseng.com domain is now DNSSEC enabled. This can be verified with Verisign’s DNSSEC debugger. If you use a DNSSEC-enabled resolver, you can be sure you’re getting to the legit server for https://zitseng.com/. This is a separate matter from using HTTPS.

The next thing is related to HTTPS. You know HTTPS websites require an SSL (or nowadays more properly called TLS) certificate. This domain now uses a CAA record to publish administrative restrictions on certificate issuance for the domain. In principle, you should not find a TLS certificate for this website issued by a Certificate Authority that hasn’t been permitted by the CAA record.
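Here’s a rough sketch of the check a Certificate Authority is expected to make against a domain’s CAA records before issuing. It’s a simplification of the rules as I understand them from RFC 8659: it only looks at the `issue` property and ignores wildcards, the critical flag, and the climb up to parent domains. The function name `ca_may_issue` and the CA names `ca.example` and `other-ca.example` are made up for illustration; they’re not the actual records on this domain.

```python
def ca_may_issue(caa_records, ca_domain):
    """Decide whether a CA may issue, given a domain's CAA records.

    caa_records: list of (flags, tag, value) tuples, e.g. (0, "issue", "ca.example").
    ca_domain:   the identifier the CA recognises as its own (hypothetical here).
    Simplified: only the "issue" tag is considered.
    """
    issue_values = [value for _flags, tag, value in caa_records
                    if tag.lower() == "issue"]

    # No "issue" property at all means issuance is not restricted.
    if not issue_values:
        return True

    for value in issue_values:
        # Anything after ';' is optional parameters; a bare ";" names no CA.
        issuer = value.split(";", 1)[0].strip().lower()
        if issuer == ca_domain.lower():
            return True
    return False


# Hypothetical record set: only "ca.example" is permitted to issue.
records = [(0, "issue", "ca.example")]
print(ca_may_issue(records, "ca.example"))        # True
print(ca_may_issue(records, "other-ca.example"))  # False
```

Note that CAA is a check performed by the issuing CA, not by your browser, which is why I say “in principle” above.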

I have had HSTS enabled since 2015. HSTS, or HTTP Strict Transport Security, is a web security mechanism that informs web browsers, and other HTTP user agents, that they should interact with a website using only HTTPS connections. If you type http://… accidentally, your browser will know to convert that to https://… automatically. However, the browser needs to visit the site once first to learn about the HSTS policy, which means that very first visit can still start out as an http://… attempt. Soon, that will no longer be the case.
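The HSTS policy itself travels in a `Strict-Transport-Security` response header. Here’s a minimal sketch of how a client might read it, assuming the directive syntax from RFC 6797. The helper name `parse_hsts` and the sample header value are my own illustration, not necessarily the exact header this server sends.

```python
def parse_hsts(header_value):
    """Parse a Strict-Transport-Security header value into a small dict.

    Simplified sketch: unknown directives are ignored and a malformed
    max-age is left as None rather than rejected.
    """
    policy = {"max_age": None, "include_subdomains": False, "preload": False}
    for directive in header_value.split(";"):
        directive = directive.strip()
        if not directive:
            continue
        name, _, value = directive.partition("=")
        name = name.strip().lower()        # directive names are case-insensitive
        value = value.strip().strip('"')   # max-age may be a quoted string
        if name == "max-age" and value.isdigit():
            policy["max_age"] = int(value)  # seconds the policy stays valid
        elif name == "includesubdomains":
            policy["include_subdomains"] = True
        elif name == "preload":
            policy["preload"] = True
    return policy


# Sample header value (illustrative): a one-year policy covering subdomains.
print(parse_hsts("max-age=31536000; includeSubDomains; preload"))
# {'max_age': 31536000, 'include_subdomains': True, 'preload': True}
```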

This domain, zitseng.com, is now signed up in the HSTS Preload list. Basically, some domain names will be hardcoded into the browser (Chrome, Firefox, etc) so that even before the very first ever http://… attempt, the browser already knows it must use https://… instead. I’ve already seen the change in the Chrome browser source code commit (oh yes, I’m sorry I’ll be taking up a dozen or so bytes of space in your browser), and it should roll out in the next stable release of Chrome, and in other browsers (Firefox, for example) that subscribe to this HSTS Preload list.

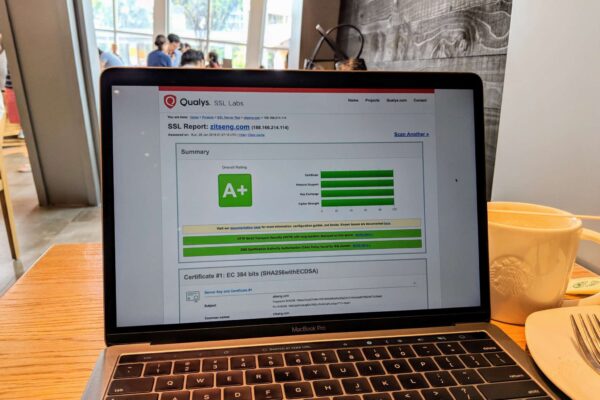
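To make the difference between learned and preloaded HSTS concrete, here’s a toy model of the decision a browser makes before sending a request. This is only a sketch under my own assumptions: real browsers ship a compiled list and also handle subdomains, expiry, and explicit ports, all of which I’ve left out. The names `upgrade_to_https`, `PRELOADED_HOSTS`, and `learned_hosts` are hypothetical.

```python
from urllib.parse import urlsplit, urlunsplit

# Stand-in for the list baked into the browser at build time.
PRELOADED_HOSTS = {"zitseng.com"}


def upgrade_to_https(url, learned_hosts=frozenset()):
    """Return the URL the browser would actually request.

    learned_hosts: hosts whose HSTS header was seen on an earlier visit.
    Simplified: exact host match only, ports left untouched.
    """
    parts = urlsplit(url)
    host = (parts.hostname or "").lower()
    if parts.scheme == "http" and (host in PRELOADED_HOSTS or host in learned_hosts):
        # Rewrite the scheme before any plain-HTTP request leaves the browser.
        return urlunsplit(parts._replace(scheme="https"))
    return url


# Preloaded host: upgraded even on the very first visit.
print(upgrade_to_https("http://zitseng.com/"))   # https://zitseng.com/
# Host never seen before and not preloaded: the first request stays on HTTP.
print(upgrade_to_https("http://example.org/"))   # http://example.org/
# Same host after its HSTS header was learned on an earlier visit.
print(upgrade_to_https("http://example.org/", learned_hosts={"example.org"}))
# https://example.org/
```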

Last but not least, I’ve decided to make the final change to hit the perfect score on the SSL Labs SSL Test. I’ve had an “A+” since, perhaps, forever. But it’s now better yet. I realised that, in terms of client compatibility, there’s effectively no difference for end users if I level up to a perfect 4x 100%.

So with the tweaks explained here, I present this blog website with a perfect SSL Labs SSL report. There isn’t anything top-secret, highly confidential, or whatnot contained in here. But that doesn’t mean we needn’t be mindful about good security.

None of that, nor any of the other stuff I’ve mentioned, is required. Your website will still work without them. The question is, do you want a website that only just works, or one that stays abreast of the latest developments in Internet and web technologies?