I’ve been running my WordPress blog for about 9 years. During this time, there have been many changes, both front-facing ones and the underlying technical bits. As a techie, I like to build and tweak those underlying layers. This post is a status update of sorts on how this blog is currently set up.

There have been a lot of changes in the last few months, including a change of hosting provider and a change of Linux distribution. You might be able to tell that I’m now hosted on DigitalOcean’s cloud servers. Many people graduate from shared web hosting to cloud servers, and so did I. The difference is that I originally started out self-hosting.

A short history. I originally ran my website off 1U rackmount servers co-located in a Singapore data centre. When the prices went up, I switched data centres. Eventually, the prices went up enough that, along with other reasons, I decided to give up self-hosting and move to a shared web hosting provider. I moved a few more times, and most recently, prior to DigitalOcean, I was using DreamHost’s DreamCompute cloud servers.

DreamHost’s services were really good. However, for reasons of network round-trip latency between Singapore and where their data centres were located, I decided to move to a provider that had a data centre located in Singapore. Although I’d like to think that my blog has international reach, the reality is that most visitors are from Singapore, and so it makes sense for the server to be located in Singapore. The move to DigitalOcean’s SGP1 data centre happened less than one month ago.

This isn’t my first time using DigitalOcean. I had actually explored DigitalOcean when they first opened their Singapore data centre. At that time, I was particularly attracted by their planned rollout of IPv6. Somehow, I ended up choosing DreamHost then.

If you’re interested, I made some recent comparisons between DreamHost’s DreamCompute and DigitalOcean. I also checked out Linode. [If you want to try out DigitalOcean, do consider using my affiliate link. You’ll get US$10 credit to explore their services.]

The recent flurry of changes was the result of some new goals I wanted to achieve with my website: I wanted to modernise its underlying technical bits. My requirements:

- Must support HTTP/2

- Must support Google PageSpeed

- HHVM

- Easy maintenance

- Static IP address for SSL

- Support IPv6

It’s kind of implied that, of course, it will be Linux, and it will be a self-managed server platform. In other words, I’m not looking at shared web hosting anymore.

Notice that I don’t really care whether I run Apache or Nginx. I did run a comparison between Apache and Nginx, which you may want to read about. I had expected Nginx to do better, but it didn’t: it performed about the same as Apache using mpm_event.

My HHVM requirement turned out to be met in a different way. You see, I had fallen behind on PHP developments and didn’t know that PHP 7.0 had been released late last year. According to my own testing (which I realise I haven’t yet blogged about), there was little difference between PHP 7.0 FPM and HHVM. In fact, PHP 7.0 FPM seemed just a tad faster, was more consistent in speed, and used less memory.

There are some benchmarks on the Internet comparing PHP 7.0 with HHVM. The self-proclaimed definitive PHP 7 benchmark declared HHVM faster for WordPress, but another benchmark by Jeff Geerling found PHP 7 just a little faster at delivering the WordPress homepage to anonymous visitors.

Since PHP 7.0 is the official PHP itself, WordPress has been updated to support it, and my own test results favoured it, I decided to go with PHP 7.0 FPM.

In case you’re a little lost, PHP-FPM (and HHVM similarly) is basically a FastCGI server that handles PHP scripts. Whenever Apache (or Nginx) needs to service a request to a PHP script, it hands over the request to an external server via the FastCGI protocol.
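To make that hand-over a little more concrete, here is a minimal Python sketch of the bytes a web server sends to a FastCGI server such as PHP-FPM to start a request, following the FastCGI 1.0 record layout. It is an illustration of the wire format only, not how Apache’s own FastCGI module is written; the helper names are hypothetical, and actually sending the bytes over PHP-FPM’s socket and reading its replies are left out.

```python
import struct

# Record types and role from the FastCGI 1.0 specification.
FCGI_VERSION_1 = 1
FCGI_BEGIN_REQUEST = 1
FCGI_PARAMS = 4
FCGI_STDIN = 5
FCGI_RESPONDER = 1


def fcgi_record(rec_type: int, request_id: int, content: bytes) -> bytes:
    """Wrap content in an 8-byte record header, padding the content to a multiple of 8."""
    padding = (-len(content)) % 8
    # version, type, requestId, contentLength, paddingLength, reserved byte
    header = struct.pack(">BBHHBx", FCGI_VERSION_1, rec_type, request_id,
                         len(content), padding)
    return header + content + b"\x00" * padding


def encode_length(n: int) -> bytes:
    """Lengths below 128 take one byte; longer ones take four bytes with the top bit set."""
    if n < 128:
        return bytes([n])
    return struct.pack(">I", n | 0x80000000)


def encode_params(params: dict[str, str]) -> bytes:
    """Encode CGI-style variables (SCRIPT_FILENAME, REQUEST_METHOD, ...) as name-value pairs."""
    out = bytearray()
    for name, value in params.items():
        k, v = name.encode(), value.encode()
        out += encode_length(len(k)) + encode_length(len(v)) + k + v
    return bytes(out)


def build_request(request_id: int, params: dict[str, str]) -> bytes:
    """BEGIN_REQUEST, the PARAMS stream, then an empty STDIN (no request body)."""
    begin_body = struct.pack(">HB5x", FCGI_RESPONDER, 0)  # role, flags (0 = close after)
    return (
        fcgi_record(FCGI_BEGIN_REQUEST, request_id, begin_body)
        + fcgi_record(FCGI_PARAMS, request_id, encode_params(params))
        + fcgi_record(FCGI_PARAMS, request_id, b"")  # empty record ends PARAMS
        + fcgi_record(FCGI_STDIN, request_id, b"")   # empty record ends STDIN
    )
```

For example, `build_request(1, {"REQUEST_METHOD": "GET", "SCRIPT_FILENAME": "/var/www/html/index.php"})` would produce the opening bytes of a request for a hypothetical document root. PHP-FPM then answers with FCGI_STDOUT records carrying the generated page, which the web server relays to the browser.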

I also changed Linux distributions. I’ve been a long-time CentOS user. I disliked Ubuntu because of all the things it did differently from what I was used to. I know there are perhaps good and sound reasons for them, but I just didn’t want to deal with something different. Unfortunately, CentOS 7.0 also started to do things differently, although it’s still easier for me to master than Ubuntu. But I began to get disappointed that CentOS tends to be less up to date with its prebuilt packages. I appreciate stability, but I also want new stuff, such as HTTP/2. CentOS’ Apache was too old to support HTTP/2. I could have used Nginx, but I didn’t want to compile Google PageSpeed into Nginx myself (there’s no packaged build of PageSpeed for Nginx available).

While I am perfectly capable of compiling software from source, I want to avoid that as far as possible. My goal of an easily maintainable platform means that I should only need to do “yum upgrade” (or “apt-get upgrade”).

A modern enough Apache that supports HTTP/2 meant I had to choose Ubuntu 16.04. Unfortunately, Ubuntu decided not to include HTTP/2 support (mod_http2) in its packages. Small matter: I could compile just this one module myself. It wouldn’t affect how Ubuntu updates other packages anyway, including Apache.

Unfortunately, I ran into a bug, one that had been reported and fixed in Apache 2.4.20. The bug is present in Apache 2.4.18, which ships with Ubuntu. It causes httpd processes (or threads) to get stuck in the “Gracefully Finishing” state upon shutdown, such as when you reload httpd or when some worker processes get killed to reduce the idle worker count. Eventually, everything gets stuck and Apache cannot spin up any more workers. This is a showstopper. It was reported as bug 59078, whose fix introduced bug 59121, which was also fixed. The source of the problem is mod_http2, but fixing it also requires changes to Apache’s core. At this rate, I’d have to build the whole of Apache myself.
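If you suspect you’re hitting this bug, Apache’s mod_status scoreboard shows it directly, since slots in the “Gracefully Finishing” state are marked `G`. Below is a minimal Python sketch that counts scoreboard states. It assumes mod_status is enabled and reachable at `http://localhost/server-status?auto` (a hypothetical URL; adjust for your own setup), and the 50% threshold in `looks_stuck` is an arbitrary, hypothetical heuristic rather than anything Apache defines. It only detects the symptom; it does not fix anything.

```python
from collections import Counter
from urllib.request import urlopen

# Scoreboard characters as documented by mod_status.
SCOREBOARD_KEY = {
    "_": "waiting for connection",
    "S": "starting up",
    "R": "reading request",
    "W": "sending reply",
    "K": "keepalive",
    "D": "DNS lookup",
    "C": "closing connection",
    "L": "logging",
    "G": "gracefully finishing",
    "I": "idle cleanup",
    ".": "open slot",
}


def fetch_status(url: str = "http://localhost/server-status?auto") -> str:
    """Fetch the machine-readable mod_status page (the URL is an assumption)."""
    with urlopen(url, timeout=5) as resp:
        return resp.read().decode("utf-8", "replace")


def parse_scoreboard(status_text: str) -> Counter:
    """Count worker slots per state from the 'Scoreboard:' line."""
    for line in status_text.splitlines():
        if line.startswith("Scoreboard:"):
            board = line.split(":", 1)[1].strip()
            return Counter(SCOREBOARD_KEY.get(ch, "unknown") for ch in board)
    raise ValueError("no Scoreboard line found")


def looks_stuck(counts: Counter, threshold: float = 0.5) -> bool:
    """Hypothetical heuristic: flag when most occupied slots are gracefully finishing."""
    occupied = sum(n for state, n in counts.items() if state != "open slot")
    return occupied > 0 and counts["gracefully finishing"] / occupied >= threshold
```

On a live server, `looks_stuck(parse_scoreboard(fetch_status()))` could be run periodically, for example from cron, to warn before Apache runs out of workers.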

I reluctantly turned to Ondrej Sury’s PPA for Apache2. I was reluctant because I didn’t want to depend on a third party for updates. But the choice was between building Apache myself and having someone else build the packages for me, and with the maintainability goal in mind, I’ll outsource the package building to him. A side effect of using his PPA is that I don’t need to build mod_http2 myself.

My final WordPress stack at this time is as follows:

- DigitalOcean cloud server, hosted in their SGP1 data centre

- Ubuntu 16.04

- MariaDB

- Apache2, using Ondrej Sury’s PPA

- Google PageSpeed Module

- PHP 7 FPM

- WordPress, of course

I tested it, and it’s speedy. This setup typically serves a WordPress homepage in 150 ms, tested from StarHub fibre broadband. My blog has never been this fast before.

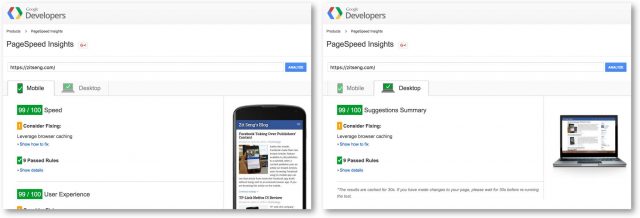
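If you want a rough, repeatable timing of your own homepage, a minimal sketch using only Python’s standard library could look like the following. This is an assumption about method and not necessarily how the 150 ms figure above was obtained. Note that `http.client` speaks HTTP/1.1 only, so it won’t exercise HTTP/2, and the “first byte” time here is really the time until the status line and headers arrive, including DNS, TCP and TLS setup.

```python
import http.client
import time
from urllib.parse import urlsplit


def time_get(url: str) -> tuple[float, float, int]:
    """Return (seconds to headers, seconds to full body, HTTP status) for one GET."""
    parts = urlsplit(url)
    conn_cls = (http.client.HTTPSConnection if parts.scheme == "https"
                else http.client.HTTPConnection)
    path = parts.path or "/"
    if parts.query:
        path += "?" + parts.query
    start = time.perf_counter()
    # The connection is opened lazily by request(), so setup time is included.
    conn = conn_cls(parts.hostname, parts.port, timeout=10)
    try:
        conn.request("GET", path)
        resp = conn.getresponse()  # returns once the status line and headers are read
        headers_at = time.perf_counter() - start
        resp.read()                # drain the body
        total = time.perf_counter() - start
        return headers_at, total, resp.status
    finally:
        conn.close()
```

Calling `time_get("https://example.com/")` (substitute your own site) a few times and taking the median smooths out one-off network hiccups.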

Google PageSpeed Module for Apache optimises how content is delivered to web browsers, improving the end-user experience. It does things like minifying, combining and inlining resources, and much more. I’ve also had to tweak some parts of the CSS and JavaScript to make things better, but PageSpeed Module really does most of the heavy lifting. My website now scores 99 across the board on PageSpeed Insights.

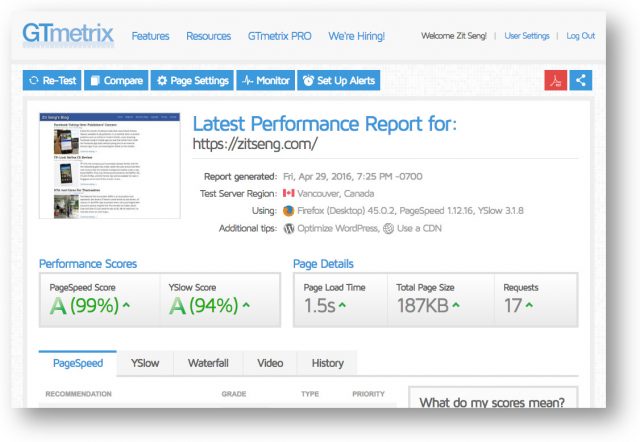

The end-user performance improvement is also affirmed by GTmetrix, which shows a 94% YSlow score. This test was performed from Vancouver, Canada, and much of the page load time is due to the initial connection, which accounts for 423 ms. Subsequent requests complete much more quickly thanks to connection reuse.

I started using ECDSA-based SSL certificates about two months ago. They are faster and stronger than the more common RSA-based SSL certificates. Not many sites use ECDSA-based SSL certificates, but Facebook does, as do some of Google’s sites. Just about all modern web browsers already support them, but adoption of this faster and stronger certificate type is still slow. My website scores A+ on the Qualys SSL Server Test.

The latest development here is the activation of Facebook’s new Instant Articles feature. If you read this post on your smartphone using the Facebook mobile app, you’ll notice that you’re consuming the content from within the Facebook app itself. You didn’t get sent to an external web browser to load my webpage. This is all part of improving the end user experience.

That wraps up the changes and developments of the last couple of months. The various optimisations have made the site run faster, and at the same time, my cloud server seems quite oversized for the load it actually receives. However, I suppose it never hurts to be prepared for a sudden onslaught of traffic.
